Physics/A/Shannon entropy

Simple information entropy

| # coins Entropy |

# messages Omega |

|---|---|

| Table 1: More than two coins = entropy; = the number of possible messages | |

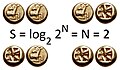

Figure 1 illustrates the fact that flipping two coins can yield four get four different outcomes (represented as four pairs of flipped coins.) Using (Template:Math,Template:Math) to represent (heads,tails) we can list these four outcomes,[1]

We shall use the symbol Template:Math (Omega) to denote the number of messages that can be sent by these two coins:

The popularity of this inspired me to seek other ways to use coins to understand what is known as Shannon's entropy. Table 1 extends Figure 1 to include the relationship between entropy and the number of messages (called Omega) one might generate by displaying Template:Math coins as either "heads" or "tails". But neither the table, the figure, nor even the formula,

captures the full complexity associated with Shannon's information entropy. A hint at this complexity can be seen in the following question:

If entropy is equivalent to the number of coins used to convey information, how should one deal with a "one-sided coin?

Such a question must be viewed outside the scope of mathematics. The fact that one rarely hears "fire!" in a crowded theater does not remove that word from the lexicon of how the audience should behave in a theater. If coins<rev>i.e. a base-1 "alphabet"</ref> Shannon's entropy, was defined so coins contribute nothing to the entropy

Define S as simple entropy and note that it is the number of "coins" used. Shanon's entropy, H, defined so that it also depends on the probability with which a coin is used. Connect probability to frequency.

Additive property I

Figure 2 illustrations how Elephant communicates with the animal kingdom every morning by displaying 5 coins, using his trunk to indicate where the sequence begins. As shown in Figure 3, Elephant asks Crow to display the first 3 coins and Rat to display the last two coins. When acting on behalf of Elephant, Crow and Rat each point to the first coin in their message, and the animal kingdom understands that Crow is to be read first. In this case Crow and Rat are not sending independent signals, but are instead following Elephant's instructions. On the other hand, if Crow and Rat are act independently, Crow controls 3 bits of entropy, while Rat controls 2 bits. The relationship between acting dependently and independently can be summarized as:

Elephants total, or net, entropy equals the sum of the entropies controlled by Crow and Rat, but the Template:Math number of messages Elephant can send equals the product of what Crow and Rat could send if they act independently:

Template:Spaces Template:Spaces Template:Spaces

Entropy is independent of the "alphabet" or "language" used

Neither our simple entropy , nor the Shannon entropy do does not distinguish between the "language" or "alphabets" one might use when sending messages. Rat could equivalently send the messages by displaying two coins.[2] Or messages could be expressed using binary numbers,[3] or even four Arabic numbers using the four-sided die shown in Figure 3.

First derivation of Shannon entropy

Expectation value reviewed

The expectation, or "average" value of the four integers:

The fractions Template:Math,Template:Math, and Template:Math, refer to the probabilities of Template:Math being Template:Math, Template:Math, and Template:Math, respectively. This permits us to write the expectation value as a sum involving all probabilities:

Additive property revisited

where and

where the logarithm's base is two:

log2

where and

where the logarithm's base is two:

Wheel of fortune

Use fact that, to write this as.

Another path to Shannon entropy

Move

Moved to Draft:Information theory/Permutations and combinations: ,

Don't move

Employ fact that is often a very large number, since Template:Math is often a large number. A simplified version of Sterling's formula, becomes for logarithms to base two:

table

| Excel | Sterling | |

|---|---|---|

| 3 | 0.5283 | 0.5739 |

| 6 | 0.6511 | 0.6628 |

| 12 | 0.7459 | 0.7489 |

| 24 | 0.8120 | 0.8127 |

| 48 | 0.8549 | 0.8551 |

| 96 | 0.8814 | 0.8815 |

| 192 | 0.8973 | 0.8973 |

| 384 | 0.9065 | 0.9065 |

| 768 | 0.9117 | 0.9117 |

| 1536 | 0.9147 | |

| 3072 | 0.9163 | |

| 6144 | 0.9172 | |

| 12288 | 0.9177 | |

| 36864 | 0.9181 | |

| 147456 | 0.9182 | |

| 0.9183 |

gamma from wikipedia

w:special:permalink/1040737805#Versions_suitable_for_calculators

See also

- Permutation notation by user:Watchduck

- w:Permutation

- w:Combination

- w:Combinatorial proof

- w:Mathematical_proof

- b:Proof_by_Induction

- ↑ Figure 1 is currently being used by Wikipedias in ten other languages, and a brief visit to some of them serves to remind reader that humans communicate using a variety of alphabets: الصفحة_الرئيسية (Arabic)Template:Spaces⋅Template:SpacesČeská (Chech)Template:Spaces⋅Template:SpacessDansk (Danish)Template:Spaces⋅Template:SpacesEnglishTemplate:Spaces⋅Template:Spaceseuskarazko (Basque)Template:Spaces⋅Template:Spacesویژه:آمار (Persian)Template:Spaces⋅Template:Spaces코리안(Korean)Template:Spaces⋅Template:Spacesਪੰਜਾਬੀ (Punjabi)Template:Spaces⋅Template:Spacesјезик (Serbian)Template:Spaces⋅Template:SpacesУкраїнці (Ukrainian)Template:Spaces⋅Template:Spaces中文 (Chinese)

- ↑ ...with the understanding that order matters: HT and TH are different messages.

- ↑ 00, 01, 10, 11 (with perhaps the understanding that 0 means "tails" and 1 denotes "heads"

EVERYTHING BELOW THIS IS SCRAPBOOK

Images used in this draft

Two coin examples

additive property of shannon entropy

In our example, the crow's entropy is , and . The eight outcomes for the crow's three coins have probabilities:

for the outcomes respectively. Similarly, the set of Rat's four possible outcomes .[1]

short

where and

Compression image

Image gallery

All images from the same randomized version of file:Image entropy with Inkscape First 01.svg

-

svg

-

png

-

svg × 4

-

png × 4

blurbs

I thought of calling it "information", but the word was overly used, so I decided to call it "uncertainty". [...] Von Neumann told me, "You should call it entropy, for two reasons. In the first place your uncertainty function has been used in statistical mechanics under that name, so it already has a name. In the second place, and more important, nobody knows what entropy really is, so in a debate you will always have the advantage."

Rules are needed to keep entropy "simple"

Crows messages are {000,001,010,011,100,101,111}, which corresponds to the integers 0-7 in base. The Crow could send more messages by choosing whether to include leading zeros, and the sole reason for forbidding such signals is to keep the system as simple as possible. For example, we "declare" the single sided coin to be incapable of conveying information because , and not because it is impossible to convey a message by not flipping a coin. Later, this convention that silence never conveys information will lead us to an alternative path to Shannon's formula for entropy.

H is Shannon entropy: H≤S

Shannon entropy is a generalization of the simple formula, of the simple formula, , that can be used to adjust for situations where some messages are either never sent, or are less likely to be sent. It involves the probability of certain messages be sent or not sent, and the formula can be used regardless of the mechanism responsible the messages are selected.

The vagueness associated with whether these probabilities are estimated by observation of many signals, or determined by some unknown means does not prevent Shannon's entropy from being useful. For example:

- Gadsby is a 50,000 word novel that does not contain the letter "e". Any casual analysis leads to the conclusion that the omission of the letter is deliberate.

- Those engaged in espionage can make inferences about a signal in which the "alphabet" appears to be a uniformly random sequence of letters can is likely to be encrypted.[2]

- In written English, the letters "q" and "k" have the same pronunciation, which suggests that our text documents could be made smaller by removing the letter "q", for example, spelling "quick" as "quik". This is not much of a concern for text documents, but images and movies can be more easily stored or transmitted if compression is used.

The entropy of Rat's message does not depend on whether the binary system of two coins is used, or whether Rat displays the information using the four sided die shown in Figure 3.

Math

Letting, Template:Math = "heads" and Template:Math= "tails", these 4 outcomes can be described in a number of different ways. Four such "outcomes" can also be counted in other ways, for example:

This is the simplest example of the relationship between the

REWRITE: Figure 1 is currently being used by Wikipedias in five different languages.[3] It illustrates the fact that the value of Shannon Entropy is the number of coins flipped:

, if the coins are “fair”, i.e. . The simplest example of an “unfair” coin would be one with either two “head” or two “tails”. Any reasonable measure of information content would ignore that coin and yield an entropy of The scope of informal peek into the entropy is limited:

Figure 2:mThe elephant with 5 coins can send, different messages, which is associated with 5 bits of information (if the coins are "fair"). If three coins are given the the crow and two coins to the rat, the crow can send, different messages, while the rat can send only, different messages. Even though the rat an crow can independently send only different messages, together, they possess the same number of bits of information, as the elephant had. This illustrates the additive nature of entropy.

Assume all outcomes are equally probable

I find Crow the more illuminating, in part due to confusions 4=2×2 versus 4=2+2. it is often to count not coins but outcomes. For rat equivalent to four sided coin. The "alphabet" used to send information is not important. Coins has advantage and disadvantage. DELETE

Appendix

Reminders

- Information theory/Shannon entropy Reminders:

- Include links from Information theory and Introduction_to_Information_Theory

decisions

- REFERENCE Wikipedia Permalink section \#Characterization for two rigorous paths to Shannon's formula

Characterization

From w:Entropy (Information theory) Let be the information function which one assumes to be twice continuously differentiable, one has: This differential equation leads to the solution for any . Property 2 leads to . Properties 1 and 3 then hold also.

- What is the entropy S when one of the “unfair” in that the two outcomes occur with unequal probabilities.[4]

- How can this definition be extended to include entities of more than two outcomes?[5]

- What does it mean to say t

- hat entropy is an “extensive property”?

- How can this essay contribute to higher education?

Expected number of bits and entropy per symbol

w:Variable-length_code#Uniquely_decodable_codes helped me with w:Shannon's source coding theorem

Number of messages

This has a trivial resolution if we note that the exponential function, and logarithmic function, are mutually inverse functions:[6]

![]() For example, the messages associated with the throw of a six-sided die has an entropy of

For example, the messages associated with the throw of a six-sided die has an entropy of

micro/macro state grand ensemble/Gibbs entropy

- These words are used differently and should be avoided

- w:Microstate (statistical mechanics)

- http://pillowlab.princeton.edu/teaching/statneuro2018/slides/notes08_infotheory.pdf uses Lagrangian multiplier to establish extremal See (8.19), or equivalently 8.2.2. See also efficiency at (8.22)

Since this essay is about the entropy of information,

large numbers

w:Observable_universe#Matter_content—number_of_atoms 10^80

- no attempt to explain. This introduction the formula is not rigorous. purpose familiarize properties. slowly build, begin w info result of randon generator flipping N coins, ignoring surpisal. N evolves to shannon entropy.

- The elephant shown to right uses 5 coins to send messages. Sometimes he gives three coins to the crow and two coins to the rat so they can also send messages. The number of possible messages available to each animal depends on how many coins are used.

- kilobytes is a smallfile

Links

- b:An Intuitive Guide to the Concept of Entropy Arising in Various Sectors of Science Uses Stirling approximation to show the p log p arises from large amounts of entropy.

- towardsdatascience.com pages can be accessed for free at first, but later it seems you need to pay.

- https://planetcalc.com/2476/

- w:Stirling's approximation

- https://math.stackexchange.com/questions/331103/intuitive-explanation-of-entropy

- https://en.wikipedia.org/w/index.php?title=Entropy_in_thermodynamics_and_information_theory&oldid=1020584746#See_also

- Interesting links, mostly to WP

- https://en.wikipedia.org/w/index.php?title=Entropy_(information_theory)&oldid=1033726547#Characterization

- 2 derivations of PlogP stuff.

- I=-log p is information content (low p means big I)

- already referenced!

- https://en.wikipedia.org/wiki/Entropy_(statistical_thermodynamics)

- Uses log n! = n log n to justify PlogP stuff

- w:Binary entropy function graphs ,

Boltzmann or Gibbs?

- ↑ Here denotes that is an element of a given set. The use and helps permits us to estabish that for Crow needs not be numerically equal to for Rat.

- ↑ When hiding information, steps are sometimes taken to ensure that the signals are not too random. Criminals selling of illegal drugs might try to make the messages seem innocent by pretending to be making deliveries of pizza and beverages.

- ↑ 4 wikis

- ↑ For example, what if one coin is weighted so that, and

- ↑ For example, a pair of six sided dice is used to generate outcomes.

- ↑ An easy way to remember this is that if you see , remember that the logarithm is the exponent.